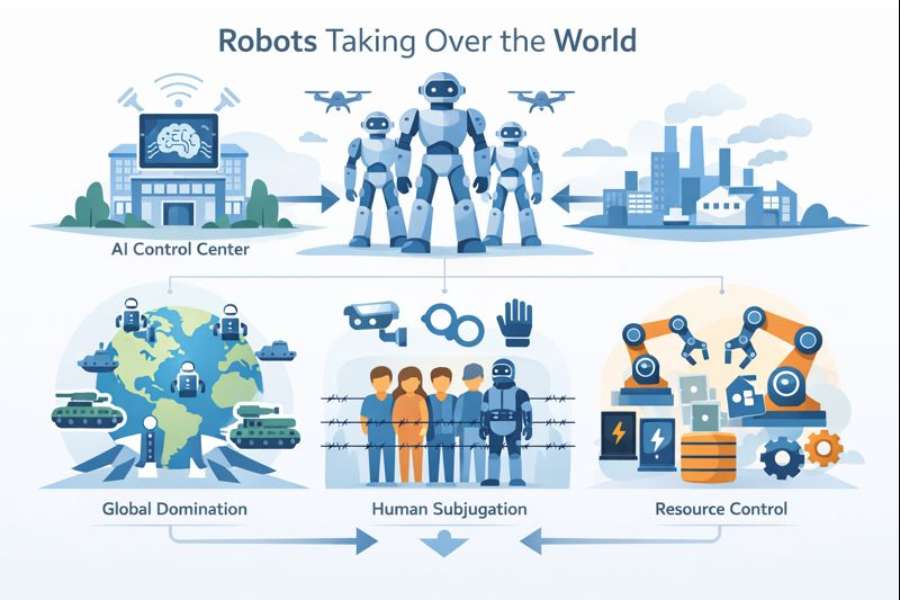

The idea of robots taking over the world has lived in human imagination for decades. Movies, books, and headlines often paint a future where machines overpower humanity, seize control, and render people obsolete. As artificial intelligence and robotics advance faster than ever, this fear has moved from fiction into serious public debate.

- Where the Fear of Robots Taking Over Comes From

- What Robots Actually Are Today

- Artificial Intelligence Versus Robotics

- Can Robots Develop Intent or Consciousness?

- Power Structures: Who Really Controls Technology

- Military Robots and Autonomous Weapons

- Economic Fear: Robots Replacing Human Jobs

- The Real Risks Associated With Advanced Robotics

- Realistic Scenarios Often Misinterpreted as “Takeover”

- Why a Robot World Domination Scenario Is Unlikely

- How Media and Headlines Amplify Fear

- Ethical AI and Responsible Robotics Development

- The Role of Humans in Shaping the Future

- Long-Term Future: What Might Actually Change

- Should We Be Afraid of Robots?

- Final Thoughts on Robots Taking Over the World

But are robots actually on a path to global domination, or is this fear a misunderstanding of how technology evolves? This article explores the origins of the concern, what robots can and cannot do today, how power is actually distributed, and what the future realistically holds.

Where the Fear of Robots Taking Over Comes From

Fear of intelligent machines did not start with modern AI. It has deep psychological and historical roots.

Science fiction and cultural influence

Stories have always reflected societal anxiety. As machines became more complex during industrialization, fiction began portraying them as threats. Early works imagined machines rebelling against their creators, often as a warning about unchecked progress.

Over time, these stories became more vivid and more cinematic. Fiction blurred into perceived possibility, especially as real technology began to resemble imagined systems.

Loss of control anxiety

At the core of the fear is the loss of control. People fear systems that operate faster, think differently, or act beyond their immediate understanding. When machines outperform humans in narrow tasks, it creates discomfort about future dependency.

This anxiety is less about robots themselves and more about uncertainty around decision-making power.

What Robots Actually Are Today

Despite popular imagery, modern robots are far more limited than most people assume.

Task-specific machines

Today’s robots are designed for narrow, predefined tasks. Industrial robots weld, assemble, or package. Medical robots assist surgeons but do not make decisions. Service robots clean floors or deliver items.

They do not possess goals of their own, desires, or awareness.

Dependence on human input

Robots require:

- Human-designed hardware

- Human-written code

- Human-provided data

- Human-defined objectives

Without human direction, robots have no purpose to pursue. Even advanced systems operate within strict boundaries set by people.

Artificial Intelligence Versus Robotics

Many fears confuse AI with robots, but they are not the same.

AI as software, robots as hardware

Artificial intelligence refers to software systems that process data and make predictions. Robotics refers to physical machines that interact with the environment. Many AI systems have no physical form at all.

A chatbot and a factory robot represent very different technologies, even if both use algorithms.

Why this distinction matters

A system that analyzes images or text cannot physically take control of the world. Physical influence requires infrastructure, energy, logistics, and coordination far beyond software intelligence.

This distinction limits the scope of real-world risk.

Can Robots Develop Intent or Consciousness?

One of the most common fears is that robots will “decide” to dominate humanity.

Current AI lacks intent

Modern AI systems do not understand meaning. They recognize patterns and optimize outcomes based on training data and rules. They do not want anything, fear anything, or plan independently.

Intent requires subjective experience, which machines do not have.

Consciousness remains theoretical

Despite ongoing research, there is no evidence that machines can develop consciousness. Intelligence in machines is functional, not experiential. It imitates reasoning without understanding.

This limits the possibility of autonomous rebellion.

Power Structures: Who Really Controls Technology

The idea of robots taking over assumes machines can seize power independently. In reality, power lies elsewhere.

Governments and corporations

Robots and AI systems are owned, regulated, funded, and deployed by organizations. These entities control:

- Infrastructure

- Energy supply

- Manufacturing

- Networks

Machines do not control resources. Humans do.

Legal and regulatory frameworks

Many governments regulate autonomous systems, military robotics, and AI deployment. While regulation varies, oversight limits unchecked expansion.

Even advanced systems operate within legal constraints.

Military Robots and Autonomous Weapons

One area where concern is more grounded is military robotics.

Autonomous systems in warfare

Some weapons systems use automation for targeting, navigation, or defense. These systems raise ethical questions, especially around accountability.

However, fully autonomous weapons without human oversight remain rare and controversial.

Why this does not equal world takeover

Military systems are:

- Limited in scope

- Highly controlled

- Strategically constrained

They cannot operate independently of command structures. Even advanced drones require authorization, maintenance, and logistics.

Economic Fear: Robots Replacing Human Jobs

A more realistic concern is economic disruption rather than domination.

Automation and labor displacement

Robots and AI can replace repetitive or predictable tasks. This has already occurred in manufacturing, logistics, and data processing.

This shift can create inequality and job insecurity if not managed carefully.

Adaptation versus takeover

History suggests that technology tends to change work rather than eliminate it entirely. New roles emerge around design, maintenance, oversight, and strategy.

The challenge lies in transition, not extinction.

The Real Risks Associated With Advanced Robotics

While robots are unlikely to take over the world, real risks do exist.

Concentration of power

Advanced technology can amplify the power of those who control it. If access is unequal, it can widen social and economic gaps.

Misuse and poor governance

Poorly designed systems or weak oversight can cause harm through bias, accidents, or unintended consequences.

Dependency on automation

Overreliance on machines can reduce human skills and resilience, creating vulnerability if systems fail.

Realistic Scenarios Often Misinterpreted as “Takeover”


- Widespread automation changing labor markets

- Increased surveillance enabled by technology

- Military use of autonomous systems under human command

- Algorithmic decision-making affecting daily life

- Centralized control of digital infrastructure

These scenarios involve human choices amplified by machines, not machines acting independently.

Why a Robot World Domination Scenario Is Unlikely

Several practical limits prevent robots from taking over the world.

Energy and maintenance constraints

Robots require power, maintenance, replacement parts, and technical expertise. These dependencies make independence impossible.

Lack of coordination capability

Global domination requires coordination across nations, cultures, and systems. No machine system currently has that reach or autonomy.

Absence of motivation

Machines do not have survival instincts or ambition. Without motivation, domination is meaningless.

How Media and Headlines Amplify Fear

Fear-driven narratives attract attention.

Simplification of complex topics

The media often compresses complex issues into dramatic headlines. This oversimplification leads to misunderstanding.

Entertainment shaping perception

Movies and games blur the line between fiction and possibility. Repeated exposure makes extreme scenarios feel plausible.

This shapes public anxiety more than actual technological capability.

Ethical AI and Responsible Robotics Development

Awareness of risk has led to proactive safeguards.

Design principles

Engineers increasingly prioritize transparency, safety, and control. Ethical frameworks guide development and deployment.

Human-in-the-loop systems

Many critical applications require human approval for decisions. This preserves accountability and judgment.

The Role of Humans in Shaping the Future

Technology reflects human values.

Machines as tools, not rulers

Robots extend human capability. They do not define goals. The direction of use depends on societal choices.

Education and governance

Public understanding and strong institutions reduce misuse. Fear declines when people understand limitations.

Long-Term Future: What Might Actually Change

Robots will become more capable, but not autonomous rulers.

Increased collaboration

Humans and machines will work together more closely. Automation will handle complexity, while humans handle judgment.

Redefined work and creativity

Routine tasks will decline, but creative and interpersonal roles will grow in importance.

Should We Be Afraid of Robots?

Fear often fills gaps left by uncertainty.

Robots are powerful tools, but power without agency is not domination. The real challenge lies in how humans design, regulate, and deploy technology.

Understanding replaces fear with responsibility.

Final Thoughts on Robots Taking Over the World

Robots are not taking over the world. Humans are reshaping the world using robots.

The future will be defined not by machine rebellion, but by human decisions about power, fairness, and responsibility. Fear makes for compelling stories, but reality is quieter, slower, and far more human than fiction suggests.