Artificial intelligence is transforming industries, governance, and everyday life. From autonomous vehicles to predictive healthcare and automated content creation, AI systems are reshaping social and economic frameworks at unprecedented speed. With this rapid growth comes pressing questions: how should AI be regulated, who makes the rules, what risks are regulators trying to mitigate, and what direction is U.S. policy taking?

- The Need for AI Regulation in the United States
- Federal Efforts Toward AI Regulation
  - Executive actions and strategic guidance
  - Legislative proposals in Congress
  - Role of federal regulatory agencies
- Core Principles in U.S. AI Policy
  - Accountability and liability
  - Transparency and explainability
  - Fairness and bias mitigation
  - Privacy and data protection
  - Safety and reliability
- Sector-Specific Regulatory Approaches
  - Healthcare and medical AI
  - Financial services
  - Transportation and autonomous vehicles
  - Employment and hiring systems
- Ethical Challenges Underpinning AI Regulation
  - Algorithmic bias and discrimination
  - Privacy and surveillance risks
  - Transparency versus proprietary systems
  - Human oversight and automated decision-making
- State and Local Government Actions on AI
- The Single Set of Regulatory Themes Shaping AI Policy
- Industry Self-Regulation and Standards Development
  - Voluntary frameworks and certifications
  - Corporate governance and ethics boards
  - Public-private partnerships
- International Context: How U.S. Policy Compares
- Enforcement and Compliance Challenges
- AI Regulation and Innovation: Finding the Balance
  - Regulatory flexibility and experimentation
  - Encouraging competition
  - Support for research and workforce development
- What Businesses Should Do Today to Prepare
  - Establish governance frameworks
  - Perform model impact assessments
  - Invest in transparency and explainability
  - Stay informed on policy developments
- Public Perception and Trust in AI Regulation
- Looking Ahead: The Future of AI Regulation in the US
- Final Thoughts on AI Regulation in the United States

This article provides a comprehensive overview of AI regulation in the United States, covering legal frameworks, federal and state action, regulatory priorities, ethical concerns, and what the future may hold for businesses, developers, and citizens.

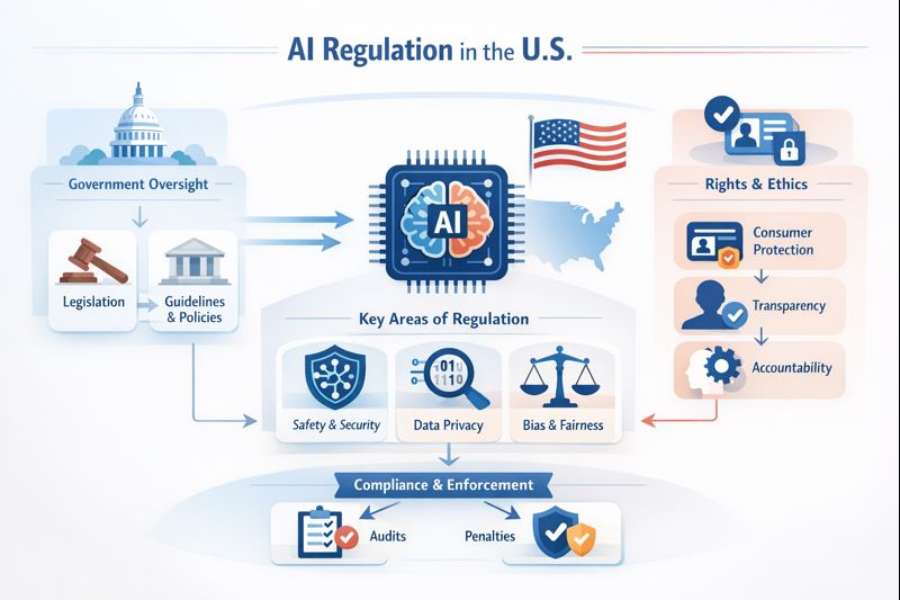

The Need for AI Regulation in the United States

AI systems affect people and institutions in complex ways. They can improve efficiency, expand access to services, and drive innovation. At the same time, they pose risks including bias, privacy harm, safety concerns, and economic disruption.

For regulators in the U.S., the priorities of AI policy include:

- Protecting civil liberties and individual rights
- Ensuring fairness and preventing discrimination
- Safeguarding data privacy and security
- Promoting transparency and accountability
- Encouraging innovation without stifling competition

Balancing these goals is not simple. Unlike many earlier technologies, AI systems operate across sectors and often make decisions that were previously made by humans. This cross-sector reach complicates the regulatory picture, because no single set of sector rules covers every use.

Federal Efforts Toward AI Regulation

At the national level, the U.S. has taken a multi-agency approach rather than enacting a single, comprehensive AI law.

Executive actions and strategic guidance

The federal government has issued strategic frameworks designed to promote trustworthy AI development while addressing ethical and safety concerns. Executive orders and strategic documents define broad principles for responsible AI, including requirements for transparency, risk assessment, and human oversight.

These policies do not impose detailed statutory obligations, but they set expectations for federal agencies and contractors.

Legislative proposals in Congress

Multiple bills have been introduced in Congress to address aspects of AI governance. These proposals vary widely in scope, with some focused on specific sectors such as autonomous vehicles or healthcare AI, and others pushing for broader regulatory standards.

Key themes in proposed legislation include:

- Transparency obligations for high-risk AI applications
- Requirements for civil rights impact assessments
- Standards for data privacy and security
- Risk-based oversight measures

Debate continues on how to balance regulatory certainty with flexibility so that rules remain relevant as technology evolves.

Role of federal regulatory agencies

Several U.S. agencies have authority over AI-related risks within their domains:

- The Federal Trade Commission (FTC) has acted on deceptive or unfair AI practices under consumer protection laws, issuing guidance on algorithmic fairness and transparency.
- The National Institute of Standards and Technology (NIST) has developed voluntary standards and frameworks to guide trustworthy AI development.
- The Food and Drug Administration (FDA) oversees AI applications in medical devices, including continuous learning systems that update after deployment.
- The Department of Transportation (DOT) and National Highway Traffic Safety Administration (NHTSA) are involved in regulating autonomous and semi-autonomous vehicles.

Because no single agency owns AI regulation end-to-end, coordination across agencies is a continuing policy priority.

Core Principles in U.S. AI Policy

Despite the lack of a single statute, U.S. AI policy often reflects shared principles that guide regulatory action. These principles help agencies and private sector stakeholders align expectations.

Accountability and liability

Regulators emphasize that organizations deploying AI systems must remain accountable for their outcomes. This includes mechanisms to address harms, correct errors, and provide recourse to affected individuals.

Transparency and explainability

Transparency is a recurring theme. Regulators advocate for documentation of AI development processes, clear descriptions of system limitations, and explainable outputs when systems affect important decisions.

Fairness and bias mitigation

AI systems trained on biased or incomplete data can produce discriminatory outcomes. Policies often require testing for bias, auditing algorithms, and taking corrective measures when disparities are found.
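
One common way to test for the disparities described above is to compare favorable-outcome rates across groups. The sketch below is illustrative only: the function names, the record format, and the 0.8 threshold (which echoes the four-fifths rule of thumb from U.S. employee-selection guidance) are assumptions, not requirements stated by any policy discussed in this article.

```python
from collections import Counter


def selection_rates(records):
    """Return the favorable-outcome rate for each group.

    `records` is an iterable of (group, favorable) pairs, where
    `favorable` is True when the system produced the positive outcome.
    """
    totals = Counter()
    favorable = Counter()
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratios(records):
    """Divide each group's rate by the highest group rate."""
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        # No group received a favorable outcome, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(records, threshold=0.8):
    """List groups whose ratio falls below the (assumed) threshold."""
    ratios = disparate_impact_ratios(records)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical audit sample: 10 decisions per group.
    sample = (
        [("A", True)] * 6 + [("A", False)] * 4
        + [("B", True)] * 3 + [("B", False)] * 7
    )
    print(disparate_impact_ratios(sample))
    print(flag_groups(sample))
```

A ratio below the threshold is a prompt for closer review and possible corrective measures; it is not a legal finding of discrimination on its own.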

Privacy and data protection

Effective AI systems require data, often including personal information. Ensuring that data collection, processing, and sharing comply with privacy expectations is a regulatory cornerstone.

Safety and reliability

AI systems must be tested for safety, particularly in high-risk domains like healthcare, transportation, and critical infrastructure. Regulatory frameworks often treat risk categorically, imposing stricter oversight where consequences of failure are high.
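
The idea of tiered oversight can be sketched as a lookup from use case to risk tier to required controls. Everything in this example is hypothetical: the tier names, the domain list (taken from the high-risk domains named above), and the controls attached to each tier are assumptions for illustration, not a summary of any actual U.S. rule.

```python
from enum import IntEnum


class RiskTier(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Assumed high-risk domains, mirroring the examples named in the text.
HIGH_RISK_DOMAINS = {"healthcare", "transportation", "critical_infrastructure"}

# Hypothetical controls; each tier adds to the one below it.
CONTROLS = {
    RiskTier.LOW: ["documentation"],
    RiskTier.MEDIUM: ["documentation", "bias_testing"],
    RiskTier.HIGH: [
        "documentation",
        "bias_testing",
        "safety_testing",
        "human_oversight",
    ],
}


def classify(domain, affects_individuals):
    """Assign a tier: high-risk domains first, then impact on individuals."""
    if domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if affects_individuals:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def required_controls(domain, affects_individuals):
    """Return the list of controls for the tier the use case falls into."""
    return CONTROLS[classify(domain, affects_individuals)]


if __name__ == "__main__":
    print(classify("healthcare", affects_individuals=True).name)
    print(required_controls("marketing", affects_individuals=False))
```

The point of the structure is that oversight scales with the consequences of failure, so a low-stakes tool is not held to the same process as a diagnostic system.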

Sector-Specific Regulatory Approaches

Instead of a one-size-fits-all AI law, the U.S. has developed sector-specific regulatory approaches tailored to risks in key domains.

Healthcare and medical AI

The FDA oversees AI used in medical devices and diagnostics. Its regulatory model blends traditional device approval pathways with adaptive frameworks designed to address continuous learning systems. Software that influences clinical decisions is closely evaluated for safety and effectiveness.

Regulation in healthcare also intersects with privacy laws like HIPAA, ensuring that AI systems do not compromise patient confidentiality.

Financial services

AI applications in banking, lending, and insurance are subject to financial regulations aimed at preventing discriminatory practices and protecting consumers. Regulators such as the Consumer Financial Protection Bureau (CFPB) and bank regulators issue guidance on model risk management and fairness in automated decision-making.

AI systems that affect credit scoring, loan approvals, or pricing are scrutinized for disparate impacts across demographic groups.

Transportation and autonomous vehicles

Autonomous vehicle safety is under the purview of DOT and NHTSA. Regulations focus on performance standards, testing protocols, and reporting requirements. The regulatory approach is iterative, with voluntary guidance supporting innovation while identifying minimum safety expectations.

The federal government has also encouraged states to develop consistent rules for testing and deploying autonomous vehicles.

Employment and hiring systems

AI tools used in hiring and workforce management raise concerns around discrimination and workplace fairness. While federal employment laws such as Title VII of the Civil Rights Act apply to discriminatory outcomes, regulators like the Equal Employment Opportunity Commission (EEOC) are increasingly focused on how automated systems contribute to bias.

Emerging guidance encourages transparency in hiring algorithms and proactive auditing.

Ethical Challenges Underpinning AI Regulation

Even with policy frameworks in place, ethical concerns complicate regulation. AI systems operate with consequences that reach into personal autonomy, democratic processes, and social equity.

Algorithmic bias and discrimination

AI systems trained on historical data can learn patterns that reflect past injustices. When these systems make decisions about credit, hiring, policing, or medical care, they can inadvertently perpetuate inequality.

Ethical regulation aims to identify and mitigate bias through rigorous testing, representative datasets, and continuous monitoring.

Privacy and surveillance risks

Advanced AI enables detailed pattern recognition across large populations. When coupled with data-intensive systems, this capability raises fears about pervasive surveillance. Regulation must balance public safety against civil liberties and ensure that AI does not erode fundamental privacy protections.

Transparency versus proprietary systems

While transparency is desirable for trust, companies often treat AI models as proprietary intellectual property. Regulators must find ways to require sufficient explainability without undermining innovation incentives.

Human oversight and automated decision-making

As AI systems take on more responsibility, questions arise about human oversight. When should humans intervene? How should accountability be shared between humans and machines? Ethical frameworks are evolving to answer these questions in different contexts.

State and Local Government Actions on AI

In the absence of comprehensive federal legislation, states and municipalities have taken their own regulatory actions.

State privacy laws

States like California, Virginia, and Colorado have enacted data privacy laws with provisions relevant to automated decision-making. These laws require transparency around profiling and automated processing, along with explainability when decisions significantly affect individuals.

Local bans and moratoria

Some local governments have implemented bans or temporary moratoria on specific AI deployments. For example, a few cities paused the use of facial recognition by law enforcement amid concerns about accuracy and racial bias. These actions reflect a cautious, iterative approach to technologies that may affect civil liberties.

Policy innovation at the state level

States also serve as laboratories for policy innovation. Some are exploring AI impact assessments or public oversight councils to review the use of AI in government services. These initiatives offer models for balancing innovation with community safeguards.

The Single Set of Regulatory Themes Shaping AI Policy

- Risk-based oversight, focusing stricter regulation on high-impact use cases
- Transparency expectations, requiring developers to explain how systems work
- Fairness and nondiscrimination mandates, addressing bias in outcomes
- Data protection standards, aligning with privacy laws and ethical norms
- Human accountability, emphasizing human review and control
- Multi-agency coordination, ensuring consistent policy across sectors
- International alignment, participating in global discussions on AI governance

These themes drive regulatory priorities across agencies, states, and industry standards.

Industry Self-Regulation and Standards Development

Given the complex and rapidly evolving nature of AI, industry groups and standards organizations play a major role in shaping responsible practices.

Voluntary frameworks and certifications

Organizations such as the Institute of Electrical and Electronics Engineers (IEEE), International Organization for Standardization (ISO), and NIST have developed voluntary frameworks that define criteria for trustworthy, ethical, and safe AI.

These standards help companies establish internal guardrails and demonstrate commitment to best practices.

Corporate governance and ethics boards

Many technology firms have created internal AI ethics boards, risk committees, and review processes to assess the impact of their systems. While self-governance does not replace government regulation, it complements policy by encouraging responsible innovation ahead of legal mandates.

Public-private partnerships

Collaboration between regulators and industry stakeholders helps shape pragmatic policy. Public-private initiatives often produce guidance that is better aligned with technical realities and business conditions.

International Context: How U.S. Policy Compares

AI governance is a global issue. Other regions have pursued more centralized regulatory approaches.

The European Union’s AI Act

The EU has taken a more prescriptive path with the AI Act, categorizing AI systems by risk and imposing detailed requirements based on those categories. The law includes obligations for high-risk systems, monitoring frameworks, and enforcement mechanisms.

While the U.S. model remains decentralized and principle-based, the EU’s approach offers an alternative model for comprehensive AI governance.

International standards and cooperation

Global forums such as the G7, OECD, and United Nations work on principles and frameworks to align AI governance across borders. International alignment helps companies operating globally and strengthens cross-border safeguards against harm.

U.S. engagement in these efforts influences domestic policy and ensures American interests are represented in global norms.

Enforcement and Compliance Challenges

Effective regulation requires mechanisms for enforcement and compliance. In the U.S., this responsibility is distributed across agencies with different powers and tools.

Regulatory enforcement

Agencies like the FTC use existing consumer protection laws to act against unfair or deceptive practices involving AI. Enforcement actions can include fines, corrective orders, and oversight requirements.

However, agencies face resource constraints and must balance enforcement with guidance and education.

Compliance within organizations

Businesses must build internal compliance systems that monitor AI practices, assess risk, document decisions, and respond to regulatory requirements. This often requires cross-functional teams that include legal, technical, and ethical expertise.

Accountability challenges

Assigning responsibility when AI systems cause harm can be difficult. Determining whether liability rests with developers, deployers, or third-party model providers is an ongoing legal and policy debate.

AI Regulation and Innovation: Finding the Balance

A key concern in shaping AI policy is protecting the public interest without stifling innovation.

Regulatory flexibility and experimentation

Flexible, risk-based regulatory models allow innovation to continue while imposing stricter oversight where necessary. Regulatory sandboxes and pilot programs enable controlled experimentation.

Encouraging competition

AI regulation also intersects with competition policy. Preventing monopolistic behavior and ensuring access to critical data and infrastructure are part of broader innovation strategies.

Support for research and workforce development

Regulatory frameworks that support research, talent development, and ethical AI education contribute to a thriving innovation ecosystem.

What Businesses Should Do Today to Prepare

Companies working with AI systems should proactively align with regulatory expectations, even in the absence of explicit law.

Establish governance frameworks

Develop internal policies for AI ethics, risk assessment, documentation, and review. These frameworks help demonstrate diligence and reduce risk.

Perform model impact assessments

Before deploying systems with real-world impact, assess potential harms related to bias, privacy, safety, and fairness. Use documented assessments to guide decision-making.
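
A documented assessment can be as simple as a structured record that refuses to count as complete until every harm area has been reviewed. The sketch below is one possible shape, not a prescribed format: the class name, the 0 to 3 severity scale, and the rule that serious findings need a written mitigation are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

# The four harm areas named in the text.
HARM_AREAS = ("bias", "privacy", "safety", "fairness")


@dataclass
class ImpactAssessment:
    """Hypothetical pre-deployment record for one AI system."""

    system_name: str
    # Maps harm area -> (severity from 0 to 3, mitigation note).
    findings: dict = field(default_factory=dict)

    def record(self, area, severity, mitigation=""):
        """Store or overwrite the finding for one harm area."""
        if area not in HARM_AREAS:
            raise ValueError(f"unknown harm area: {area}")
        if not 0 <= severity <= 3:
            raise ValueError("severity must be between 0 and 3")
        self.findings[area] = (severity, mitigation)

    def missing_areas(self):
        """Harm areas that have not been assessed yet."""
        return [area for area in HARM_AREAS if area not in self.findings]

    def ready_to_deploy(self):
        """True only if every area is assessed and every finding of
        severity 2 or higher has a non-empty mitigation note."""
        if self.missing_areas():
            return False
        return all(
            severity < 2 or bool(mitigation.strip())
            for severity, mitigation in self.findings.values()
        )


if __name__ == "__main__":
    assessment = ImpactAssessment("resume-screener")  # hypothetical system
    assessment.record("bias", 2, "quarterly audit of selection rates")
    assessment.record("privacy", 1)
    print(assessment.missing_areas())
    print(assessment.ready_to_deploy())
```

Keeping the record in code or structured data makes it easy to attach to a release checklist and to show reviewers what was considered before launch.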

Invest in transparency and explainability

Provide clear information to users and stakeholders about how AI systems work, what data they use, and what limitations exist. Transparency builds trust and reduces regulatory friction.

Stay informed on policy developments

U.S. AI policy continues to evolve. Businesses should track proposed legislation, agency guidance, and state actions to ensure ongoing compliance.

Public Perception and Trust in AI Regulation

Regulation affects not only businesses and developers but also public confidence. Trust in AI hinges on responsible governance, meaningful transparency, and visible accountability.

When regulation is perceived as weak or fragmented, public skepticism grows. When regulation aligns with societal values and addresses real risks, trust increases and adoption accelerates. Public education about AI capabilities and limitations is part of building informed consent and democratic oversight.

Looking Ahead: The Future of AI Regulation in the US

U.S. AI regulation is likely to become more structured over time. Emerging priorities include:

- Comprehensive federal legislation that codifies core standards
- Stronger enforcement mechanisms with clear penalties
- Expanded coverage of high-risk domains such as hiring, lending, and public services
- Greater interstate coordination to reduce regulatory fragmentation
- Enhanced alignment with international frameworks

Policymakers, industry leaders, and civil society will continue to shape this landscape through debate, experimentation, and incremental progress.

Final Thoughts on AI Regulation in the United States

AI regulation in the U.S. is a work in progress, shaped by competing priorities that include innovation, fairness, safety, and economic growth. The current approach blends federal strategy, agency action, state experimentation, and industry self-regulation. While challenges remain, including policy coherence and consistent enforcement, the regulatory landscape is evolving toward greater clarity and accountability.

For businesses, understanding regulatory trends and proactively adopting responsible practices is essential. For citizens, informed engagement and public discourse contribute to policy outcomes that reflect societal values.

AI regulation is not static. It will continue to adapt as technology, markets, and social expectations evolve.